Miscellaneous notes on various topics.


There’s an interesting parallel between interacting with AI and riding horses: both require learning to communicate and synchronize with an entity that thinks and reacts independently to stimuli. NASA actually developed a whole framework around this idea in 2003: the H-Metaphor.

The core issue the H-Metaphor tries to address is how to make satisfying use of automated and "agentic" machines while human operators retain control. How do we split the roles? In many ways, these questions have already been explored throughout the history of horse riding.

A standard car has a 1:1 I/O control system: steering, acceleration, and braking translate directly into predictable outcomes. A horse, by contrast, introduces a semi-autonomous control model, where its agency and behavioral variability act as a non-linear feedback loop.

You don't need to worry about hitting a tree when you're riding a horse: avoiding it is part of the horse's "presets" as an autonomous agent. You can focus on higher-level tasks and let the horse handle the rest. Even so, horse riding has been the subject of debates about control paradigms.

There was even a clash of sorts in the 19th century between two French riding masters:

- Baucher: full dominance over the horse, with complete submission and precision achieved through micromanaged flexion exercises.
- d’Aure: emphasis on practicality and the horse's natural instincts.

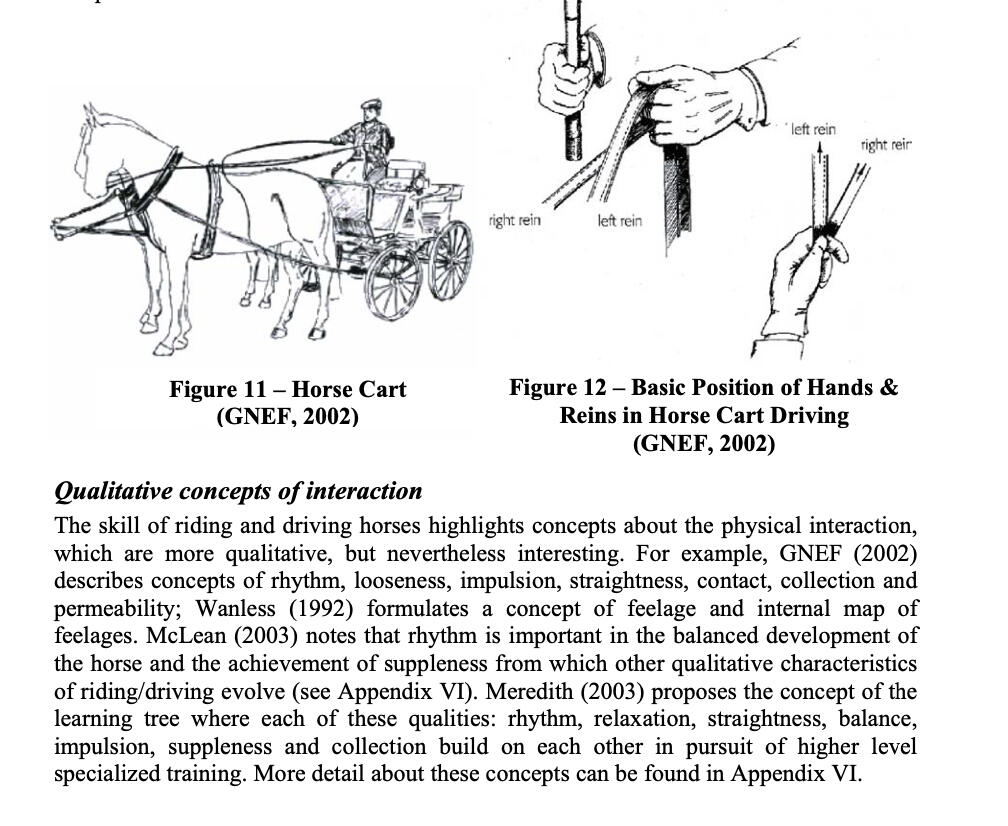

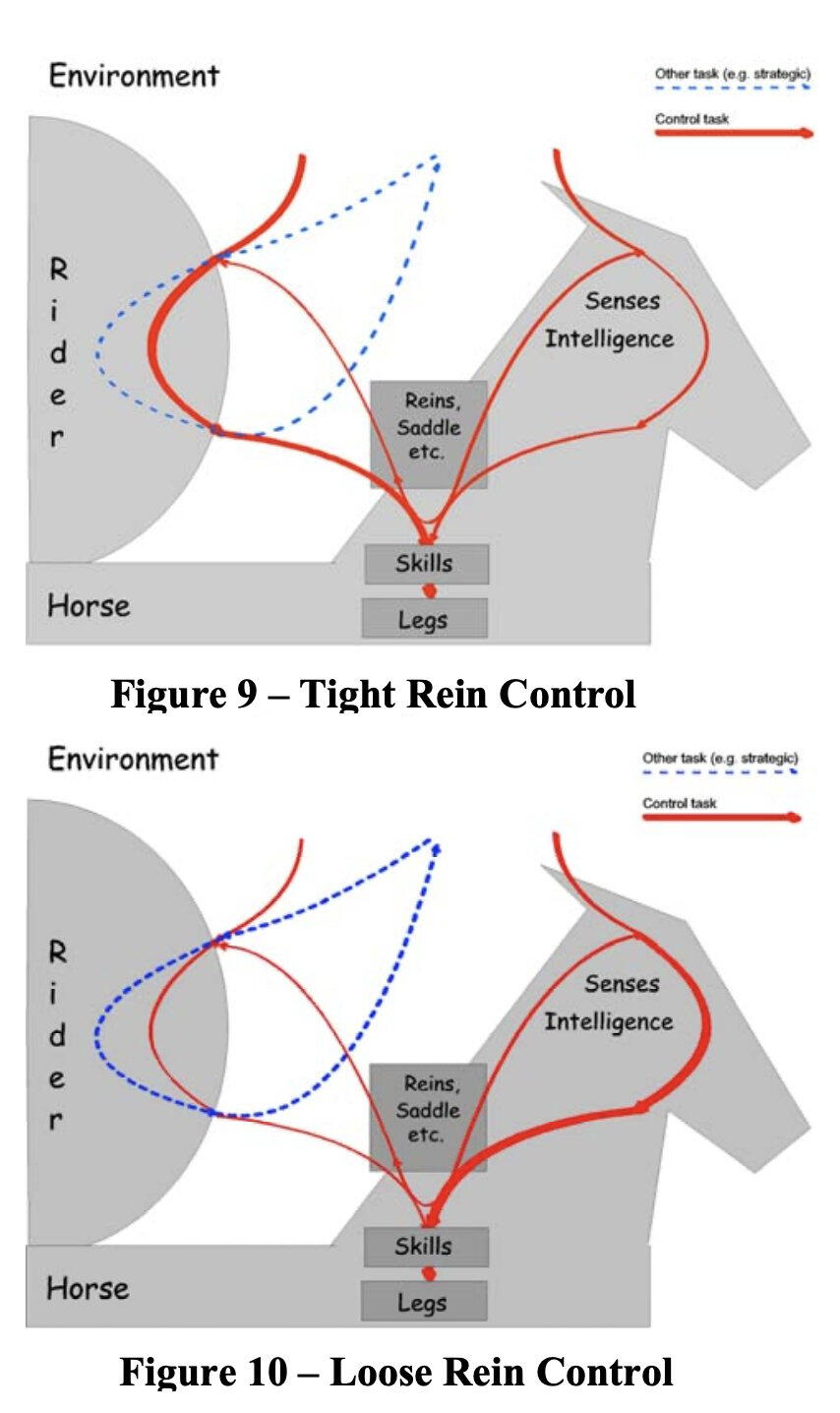

In the H-Metaphor paper, NASA points out that riders have many continuous and discrete inputs available to control the horse's behavior: leg pressure, seat movements, weight shifts, and a few voice commands. Communication also goes both ways: horses send haptic signals back.

The point is to come up with better control paradigms for autonomous systems. NASA's paper focuses specifically on vehicles, and it describes a very cool concept: a trainable, personalized control device with haptic feedback and a level of autonomy that shifts based on grip.
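To make the grip idea a bit more concrete, here is a toy sketch of shared control. It assumes a grip sensor normalized to [0, 1] and a simple linear blend between the rider's command and the automation's command. The names (`grip_to_authority`, `blend_command`), the 1-D steering model, and the thresholds are all hypothetical illustrations, not the design from NASA's paper.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """A 1-D steering command in [-1.0, 1.0] (hypothetical units)."""
    steering: float


def grip_to_authority(grip: float, loose: float = 0.2, tight: float = 0.8) -> float:
    """Map a normalized grip reading in [0, 1] to the human's share of control.

    Hypothetical mapping, loosely echoing a loose vs tight rein:
    below `loose` the automation drives, above `tight` the human drives,
    and in between authority rises linearly.
    """
    if grip <= loose:
        return 0.0
    if grip >= tight:
        return 1.0
    return (grip - loose) / (tight - loose)


def blend_command(human: Command, automation: Command, grip: float) -> Command:
    """Blend human and automation commands by grip-derived authority."""
    alpha = grip_to_authority(grip)
    steering = alpha * human.steering + (1.0 - alpha) * automation.steering
    # Clamp to the valid steering range.
    return Command(steering=max(-1.0, min(1.0, steering)))


if __name__ == "__main__":
    human = Command(steering=1.0)         # rider wants a hard right
    automation = Command(steering=-0.5)   # automation steers left, e.g. around an obstacle
    for grip in (0.1, 0.5, 0.9):
        print(grip, blend_command(human, automation, grip).steering)
```

With a light grip the output follows the automation, with a firm grip it follows the rider, and in between it is a weighted mix. A real device would presumably also need haptic feedback and smoothing over time, which this sketch leaves out.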

But beyond vehicles, we should explore new ways to communicate with software specifically. I'm interested in unconventional interfaces for automated processes because I believe they are an overlooked bottleneck in integrating AI into some productive use cases.

In many ways, it can seem contradictory to seek more control over autonomous systems, or to introduce more human imprecision into what appear to be rigorous processes. But sometimes, the imprecision is the point.

Chatting with an AI and hoping for a good result is cool, but it requires a lot of precision and becomes tiresome for small tasks. There are other ways to express our will. Even between humans, much of our communication is nonverbal: we wince, we sigh, we bite our lips, we modulate the speed of our speech... We impact the world through many interfaces.